The data pipeline: How the sausage gets made (Part 2)

This post comprises Part 2 of a 3-part series on data science in name, theory and practice.

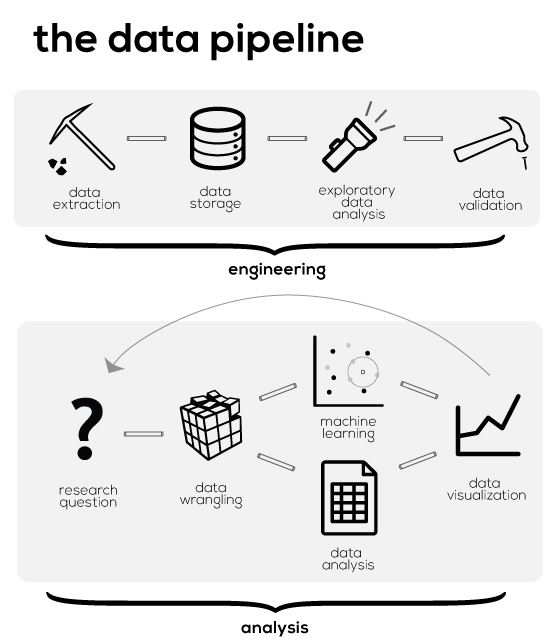

With all of today’s emphasis on big data and machine learning, it’s easy to overlook an important fact: data does not just appear out of nowhere - clean, structured and ready to be analyzed. In reality, data must first be gathered, interpreted, cleaned and transformed. In this post, I will outline these steps within framework I call “the data pipeline,” based on the flow of data from its extraction to its presentation.

The intended audience for this post are beginners entering the field of data science as an analyst or engineer. Before expanding on the steps, note that most of the software alluded to in this series will be related to Python because that is the language with which I am most familiar. Regardless, the takeaway here is the flow of data in theory; in Part 3, I will demonstrate this flow in practice.

1. Data extraction #

The first step to any analysis is getting the relevant data. Sometimes, you are provided the data by the client or can download it online in clean .csv files. More often, you have to get the data yourself. This can mean retrieving and consolidating it from diverse sources (eg. ERP, accounting, Google Analytics), scraping it from websites, interfacing with APIs or even streaming it from hardware. Once you are familiar with scraping data, a new realm of opportunities emerges: any data you see, you can scrape. (Disclaimer: you should never scrape resources prohibited by a website’s Terms of Service).

When it comes to pulling external data, two generalized software solutions I’ve found are import.io, a free SaaS designed to scrape structured data (eg. listings on eBay), and Google Sheets’ import_html() (eg. for a table on ESPN). If these don’t fit the bill, you will have to employ a programmatic solution.

The primary Python library for scraping your data is requests. When you visit a webpage, your browser sends an HTTP request to the web server with certain headers (eg. the page it wants, which browser it is), and sometimes cookies or a payload as well; the web server then replies with an HTTP response, most important of which is the webpage’s Document Object Model (DOM). The DOM is the code (HTML tags and all) that your browser uses to render the webpage you see. Requests mimics some of your browser’s basic functionality: you create an HTTP request, send it off to the web server and receive the webpage’s HTTP response. But because you used requests and not a browser, you get that response in code/text format (ie. not a rendered webpage), which is just the format you need it in to parse it.

Since you are scraping the data you first saw from your browser, it makes sense to copy the exact request your browser sent to the webpage. Some web servers won’t even let you access their pages unless it looks like the request was made from a browser. So head over to Chrome Developer Tools (F12 in Chrome) and click the Network tab to start “recording”. Go to any website and you’ll see all the requests your browser makes (eg. the page’s images, stylesheets, the DOM itself). It can sometimes be a game of guess-and-check to find the right request in a sea of other requests (if the webpage uses AJAX, you can look just at the XHR menu), but once you find it, you can copy the exact information your browser sent (under Headers) and paste it into the parameters requests will use.

Requests sends the HTTP request and receives the response, but that’s as far as it goes. So while you get the web page you were looking for (with the data within it), you get a bunch of HTML tags as well. This string of text represents unstructured data - there is no delineation between the information of interest and everything else, at least as far as your computer knows. To make sense of it, you need to structure it. When it comes to parsing the DOM, you should use BeautifulSoup. BeautifulSoup is an HTML parser that structures the DOM, allowing you to quickly isolate any set of elements and extract the data you need. For example, you can iterate over the rows in a table and store certain values in a list or dictionary.

Less commonly, your HTTP response won’t be the DOM but instead a JSON response. You should be familiar with data serialization formats (mainly JSON and XML), though it’s not really necessary to get too deep here. When it comes to .json, it behaves exactly like a Python dictionary so you just need to specify keys to get the values you’re interested in. Finally, if you need to scrape webpages that use JavaScript, you may need to simulate a browser even more by using something like selenium.

2. Data storage and data modeling #

Once you’ve scraped the data, you need to find a place to store it. If you primarily need to write a small amount of data and store it for later, you can stick to flat files, eg. comma- or tab-delimited text files. While convenient for write operations, flat files are unsuitable for read operations. Without iterating over each line in the file, how can you extract the 200th row? Flat files lack indexes, meaning you need to traverse each row/line until you find what you’re looking for.

For this reason, most large projects and enterprise-level applications are backed by databases. Databases come in many flavors, though the most common are relational (eg. Postgres, sqlite3, Oracle, SQL Server) and non-relational (eg. MongoDB, Redis, Cassandra). I won’t attempt to completely explain their differences, but I like to think that relational databases are used when you have neatly structured data. You specify the relationships between tables (eg. foreign keys), the constraints within tables (eg. column values must be unique or not null) and the type of data for each column. All of these must be known and specified in the database schema in advance. On the other hand, non-relational databases shine when it comes to unstructured data. You have data and you want to throw it into a database - you don’t want the database worrying about their relationships or constraints or data types, but rather how to quickly get you the data you’re looking for.

In my opinion, you should work with relational databases (ie. learning SQL) especially if you’re starting out. Analyzing unstructured data from a non-relational database is much harder precisely because your data lacks structure.

Finally, it’s worth knowing some commonly used database terminology (which is very hard to grasp unless you understand the big picture). In most enterprise settings, your data comes in as you conduct operations: you sell products, send emails, receive invoices, etc. This operational data compose transactions or events, which are recorded from various software sources (eg. ERP, website, payments platform, etc.). The data is consolidated into a highly normalized, online transaction processing (OLTP) schema with a focus on write performance (INSERT, UPDATE, DELETE). However, while this stores your data, it does not make it convenient to query (SELECT): for example, what were the top 10 products we sold in each city over the last 12 months? As a result, online analytical processing (OLAP) schemas evolved, which take this same operational data but optimize it for answering business questions. The process of going from OLTP to OLAP requires a process called extract-transform-load (ETL), with key business data ending up in a denormalized data warehouse. Data warehouses typically use a star schema or a snowflake schema, predicated on fact tables and dimension tables. Once your transactional data is in a data warehouse (again, just a periodic, transformed copy of your operational data), business intelligence (BI) tools, such as Looker, Tableau or Pentaho, connect to this data and simplify the process of answering business questions (eg. via query builders or visualization).

That was a mouthful! To summarize: operational data comes in and is written to an OLTP schema, then copied and transformed into an OLAP schema via a process called ETL, and finally analyzed using BI tools.

3. Exploratory data analysis #

Once your data is persistent (i.e. saved to disk and not memory), you need to know what’s in it. Exploratory data analysis (EDA) is the systematic process of learning about your data: its contents, quality, relationships and assumptions. When people refer to “mastering the data,” thorough EDA is an implicit assumption. Here are some important things to check for:

- Search for and utilize any associated documentation

- View all the columns (ie. even those which don’t immediately fit on your screen)

- Check data dimensions (ie. number of columns and rows; do the amounts make sense?)

- Know the timezone of timestamps

- Identify calculated fields (eg. a “conversion” column may be calculated from the columns “sessions” and “orders”)

- Determine units of measurement (eg. thousands or millions?)

- Check for null values (eg. -1, 999)

- Check for duplicate entries

- Verify character encoding set (Python’s chardet is useful here)

- Look for typos within manually entered data>

- Check column data types (eg. are they consistent?)

- Create a bar chart for categorical variables

- Use histograms to visualize data distributions instead of simple averages

- Compute descriptive statistics for numerical variables (eg. count, min, max, mean, quartiles)

- Create a line chart for time series (useful for identifying gaps, truncation or other anomalies in the time series)

I often use Python pandas for EDA because it simplifies many common tasks: df.head(); df.tail(); df.shape; df.info(); df.describe(); df.unique(); df.duplicated(); df.value_counts(); df.hist(); df.plot()

4. Data cleaning and validation #

Data cleaning uses the insights gleaned from EDA to remediate errors in your data. For example, once you determine that null values are recorded as “None”, you may convert these to the NULL data type. Or, you find outliers in your data which represent erroneous inputs - you may decide to drop these.

Here are some common data integrity issues:

- Fixing typos and standardizing categorical variables

- Adjusting for outliers

- Dropping duplicates

- Parsing strings for timestamp information

- Imputing/dropping null data

Whether you’re cleaning your data in Excel or a programming language, always remember to document your changes. This will considerably simplify retracing your steps when you or a colleague need to revisit the raw data.

5. Formulating the business/research questions #

Coming up with the right questions marks the starting point for data analyses. Unlike the previous steps, data analysis is closely modeled after the scientific method: ideate, test hypotheses and analyze results - then repeat. Coming up with the right questions heavily depends on a contextual understanding of the data - that is, domain knowledge.

This is where research comes in. Finding experts to speak with in person is likely the quickest and most thorough solution. Nevertheless, Google searches often suffice, if only to get a general understanding of important concepts. For example, what are the relevant key performance indicators (KPIs)? Or why does a certain variable exhibit weekly spikes? Or is the data obviously lacking certain information you’d expect it to have?

This step is a testament to the importance of EDA: the more of your data you understand, the more you can focus on the parts you don’t.

6. Data transformation #

Before you can answer any business question, you will likely need to transform your data. This is distinct from data cleaning, which is concerned with the integrity of the raw data. Here, rather, you need to transform your data into a format more amenable to analysis. Since analyses will vary depending on the question asked, the required data transformations will vary as well. As a result, it’s a good idea to keep a copy of your original, validated dataset, which you can go back to upon testing new hypotheses.

Some common data transformations are:

- Split-apply-combine operations (theory and pandas implementation), a.k.a. group-by and aggregations

- Calculating new columns as a function of other columns

- Changing the frequency of a time series (ie. upsampling and downsampling)

- SQL-like joins

- Wide-to-long or long-to-wide operations (ie. pivoting)

- Parsing strings with regular expressions

- Discretization (or binning) of numerical variables

- Subsetting/truncating portions of the data

- Normalization (eg. z-score, Box-Cox power transformations, percent changes)

- Creating dummy variables

- De-trending a time series and adjusting for seasonality to make it stationary

7. Data analysis #

Data analysis requires some degree of familiarity with statistics. This can range from topics like measures of central tendency and dispersion, to the conventionally taught frequentist statistics, all the way to the likes of machine learning and Bayesian statistics. For the vast majority of problems, intermediate statistics will provide satisfactory results. This is not to say that machine learning is not useful (quite the contrary), but rather, such sophisticated statistical methods are only worth investing in after intermediate statistics have been thoroughly exhausted. Differences in means, standard deviations and correlations, for example, can go a long way.

Excel is a great solution for data analysis on a small scale, even if you’re just using a sample of bigger data. For data too large for Excel but small enough to fit on your machine (eg. 2-4 GB), I would recommend analyzing your data using pandas. For large datasets, SQL or Business Intelligence (BI) tools are often used for analysis. Finally, for truly big data (eg. several TB), it makes sense to go with a distributed computing environment like Hadoop.

Data analyses for business problems commonly fall under:

- Descriptive statistics: information about the sample (eg. counts, totals, means, interquartile ranges, correlations)

- Inferential statistics: does the sample represent the population?

- Retrospective: regression analysis and classification with clearly specified models

- Prospective: machine learning (ie. predictive analytics) with training data, test data, and often black box models

8. Data visualization #

The final step within data analysis is visualization. Data visualization is so important that I feel comfortable using the blanket statement: “no data analysis is complete without a visualization.” No matter how comprehensive your tables may be, the fact remains that nobody likes expending extra effort to identify a trend from numbers. This is true regardless of your audience - even in a room full of rocket scientists, you will have fewer people internalizing your results if you use tables than if you use a visualization. Making a graph in Excel costs an extra 5-10 minutes; not making a graph can cost your audience’s entire focus.

Remember, tables and charts are not mutually exclusive! In all likelihood, you should use a table, as long as you keep it in the appendix.

The following are some chart types which may be useful to visualize your data:

- Histogram

- Bar chart

- Line chart

- Scatter plot

- Area charts

- Heat map

- Geographic map

- Hexbin plot

- Correlation tree

- Box plot

Sanity checking every step of the way #

Sanity checking doesn’t really follow a sequential order; rather, you should always be sanity checking your work. Without expressly including this in the data pipeline however, it’s easy to forget. For example, scraping the wrong data, or dropping useful data, or leaving in duplicate data, are all errors that can seriously compromise your results. Sanity checks are unique to each step: what you check for during analysis and during extraction are completely different.

Here are a few common sanity checks for some modules. The list is by no means exhaustive, and I hope to add more over time as I run into them.

Transformation #

- No additional or loss of null values after grouping/join operations

- Dataframe dimensions don’t deviate significantly from expectations

- Duplicates included in aggfuncs (eg. 2 identical rows included in a sum operation)

- Sum of some numeric value (eg. quantity, revenue) from original data matches that of transformed data

Analysis #

- Check your residual plots

- Data is normalized

- Time series is stationary

Visualization (more superficial) #

- Axes have names and units of measurement

- Labels are discernible

This wraps up some major topics in the field of data, from data extraction to analysis to presentation. In the next post, I’ll demonstrate the data pipeline from start to finish using Python.