Causality, causal opacity, plausible deniability, cherry-picking

Hey all -

+ mental models

Causality

In the last newsletter, I theorized that if someone (the agent) is doing something for you (the principal), they may have an interest in making it as hard as possible for you to understand. The less you know about how things truly work, the more you need to trust whatever they say.

As a result, agents have an incentive to maximize what economists call asymmetric information: the difference in information between them and you.

But I think a better term for this may actually be asymmetric knowledge. Which, of course, raises the question: what is the difference between information and knowledge?

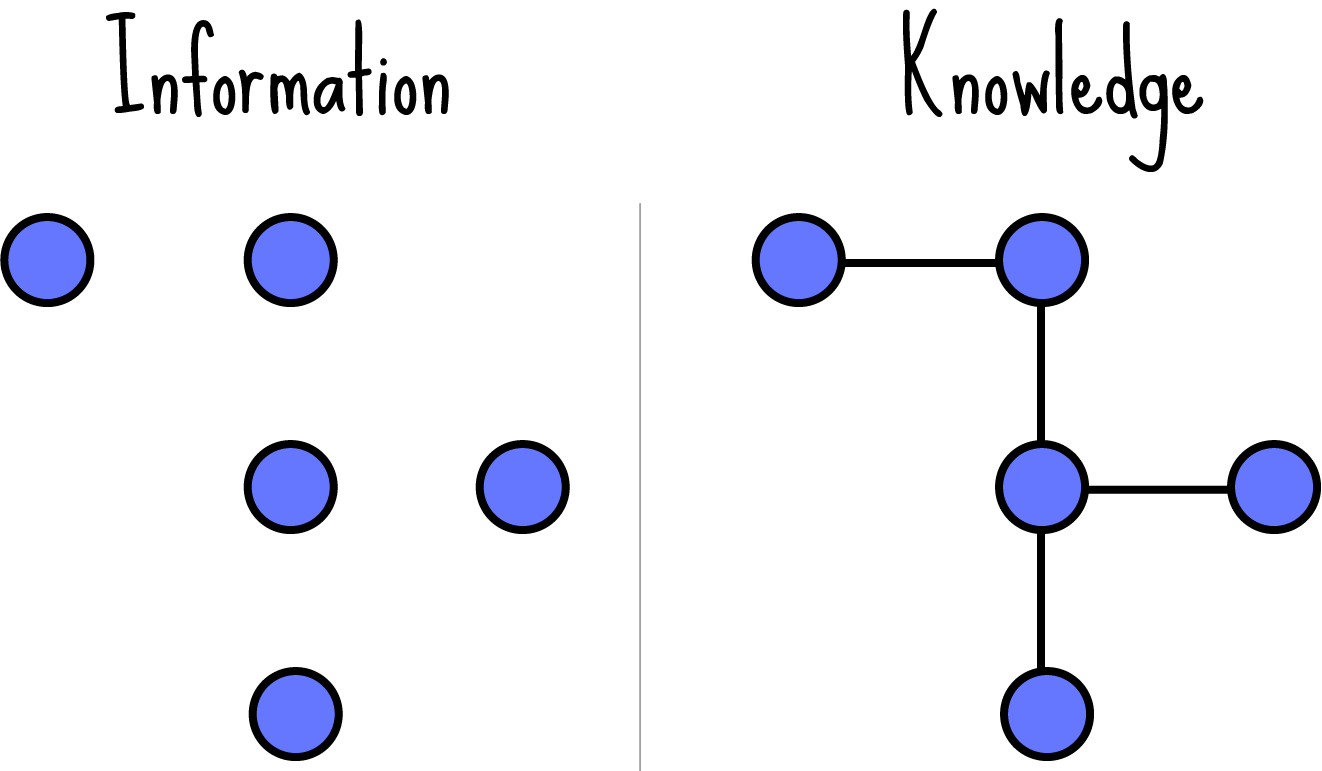

One way to think about this is by using a graph:

Here, the dots represent information: facts, evidence, observations. Information describes things as they are.

The lines “connecting the dots” represent knowledge: explanations, theories, models, frameworks. Knowledge describes the way things work.

Generally speaking, information alone is not particularly useful. “The chair is dark brown” is, in isolation, a vacuous statement. It attaches to nothing, occupies no greater context, serves no greater purpose. It just is.

On the other hand, “the chair is dark brown because it was just painted, so don’t sit on it” - now that is useful!

Knowledge chains together otherwise disparate pieces of information into causal relationships. “If it’s dark brown, it’s wet. If it’s wet, it was just painted. If it was just painted, don’t sit on it. If you do sit on it, you’ll get paint on your pants.”

Collectively, these causal relationships constitute a causal, explanatory model of the world. A useful one! An actionable one! Using this model, we can anticipate future states of the world, avoiding the ones we don’t like, and effecting the ones we do.

It’s precisely using models like these that we’re able to operate in the world and exercise some degree of control over our lives.

Causal opacity

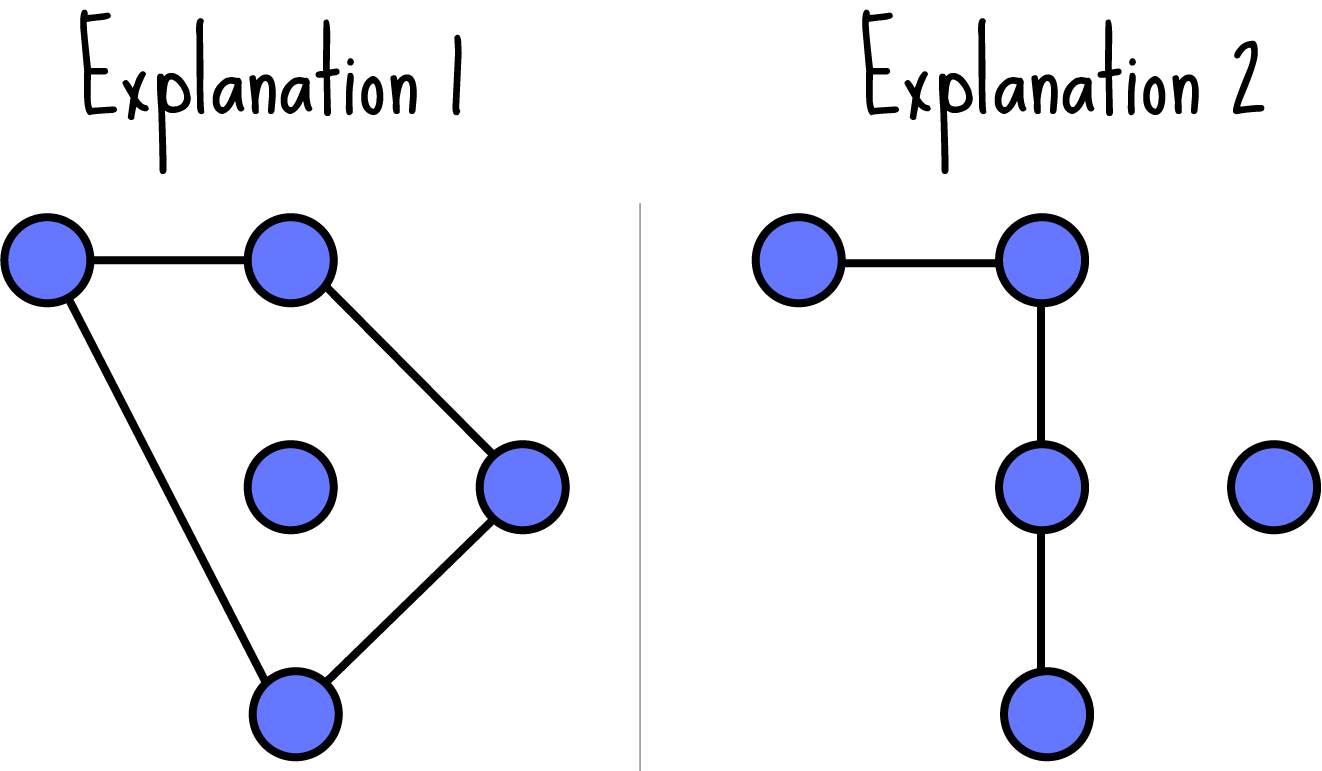

Now, there’s a problem with these explanatory models. If knowledge is the way we draw the lines between the dots, then we soon discover there are multiple ways of drawing the lines.

Two people can look at the same exact set of facts and come up with wildly different conclusions as to why we see those facts. They’re just connecting the dots differently!

I’ve alluded to this problem in a previous newsletter. Namely, there are always multiple alternative explanations for any set of outcomes you see.

Imagine you’re a business trying to evaluate the results of some advertising. It may have coincided with an increase in revenue, but what does that show? Perhaps:

- Causality: the advertising caused the increase in revenue

- Reverse causality: an increase in revenue caused the marketing team to start advertising

- Bicausality: the advertising increased revenue, which led to more advertising, which led to more revenue, and so on

- No causality: the advertising had no effect on revenue - their coincident increases were merely a statistical fluke

- Confounded causality: a new marketing director increased advertising and, separately, increased revenue through other marketing initiatives

Lots of potential explanations here. The point is that direct causality - A leads to B - is not always so clear-cut in the real world. There are always alternative explanations which are, at least superficially, somewhat credible. There are always more ways to connect the dots.
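To make the confounded case concrete, here is a toy simulation in Python. It is only a sketch under made-up assumptions: the function names, the noise levels, and the hidden `director_push` variable are all hypothetical, not data from any real business. In this imagined world, advertising has no effect on revenue at all, yet the two still move together because the same hidden factor drives both.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_confounded(weeks=500, seed=0):
    """Hypothetical world in which ad spend has NO effect on revenue.

    A hidden factor (the new director's overall push) drives both series.
    """
    rng = random.Random(seed)
    ad_spend, revenue = [], []
    for _ in range(weeks):
        director_push = rng.gauss(0, 1)  # the confounder (assumed, unobserved)
        ad_spend.append(director_push + rng.gauss(0, 0.5))
        # Note: revenue depends on the confounder only, never on ad_spend.
        revenue.append(director_push + rng.gauss(0, 0.5))
    return ad_spend, revenue


ad_spend, revenue = simulate_confounded()
print(f"correlation(ad spend, revenue) = {pearson(ad_spend, revenue):.2f}")
```

With these made-up parameters the correlation comes out strongly positive (around 0.8 in expectation), even though the code never lets advertising touch revenue. Someone who only sees the two series could easily draw the line from one dot to the other.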

In The Secret of Our Success, Joseph Henrich uses a great term for this problem. He calls it causal opacity.

In the complex world we live in, it’s sometimes very difficult to determine what actually caused what. Things have multiple causes, things happen by pure coincidence, things are part of feedback loops, things can be backwards.

In other words, we live in a constant state of causal opacity. There is always room for doubt, and by extension, always room for interpretation!

Plausible deniability

The sheer abundance of causal opacity in the world leads to a sort of terrifying realization, which is that we have a lot more flexibility in redrawing the lines than we think. Any particular explanation is just one way of drawing the lines.

For example, if your boss reprimands you for coming into work late, you may propose some alternative explanations for your behavior:

- You didn’t know coming into work on time was so important

- Your child was sick this week, so there were extenuating circumstances

- You’ve been experimenting with a new schedule, and you’ve actually felt more productive as a result

- You’ve been in phone meetings in the morning, and as a result you haven’t actually been late at all

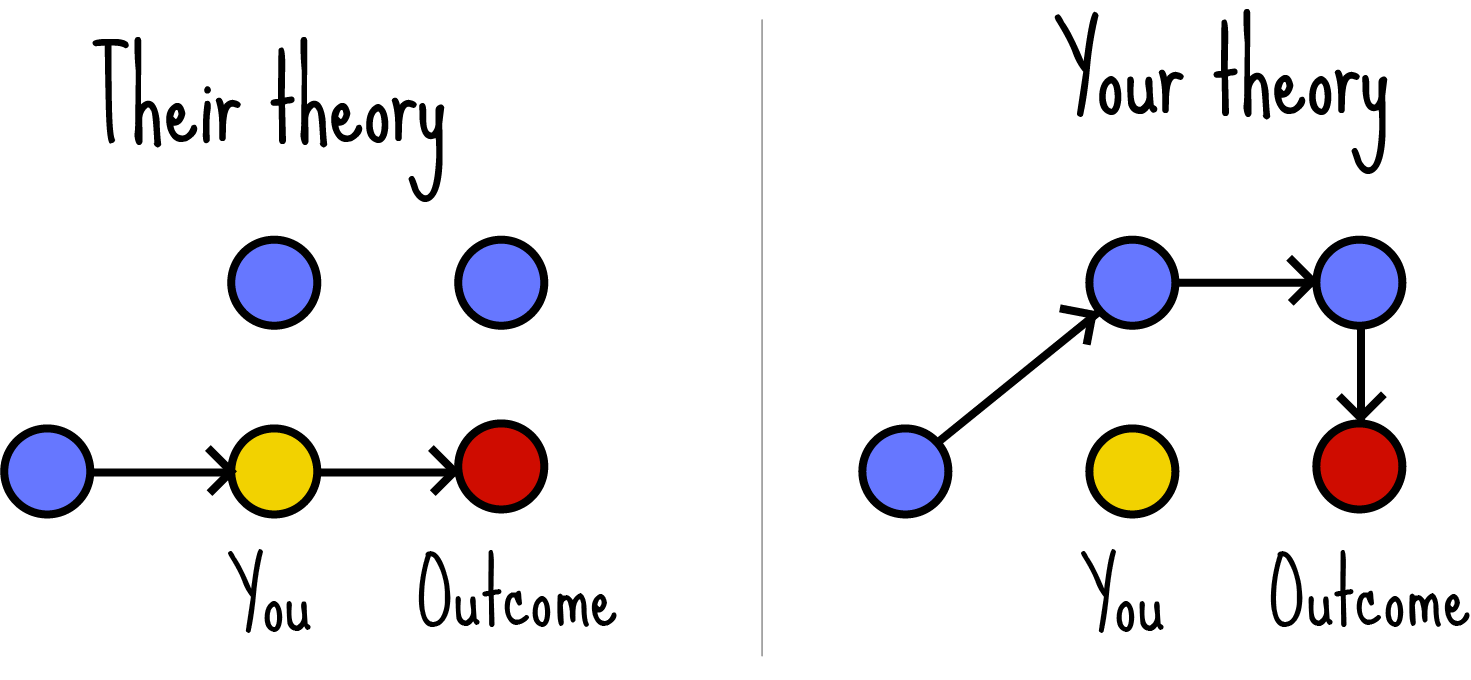

In every case, the goal is to suggest plausible, alternative explanations where you aren’t at fault, and delegitimize the ones where you are. When things go wrong, plausible deniability is about drawing the lines in such a way that they don’t go through you.

This of course can also work in reverse.

Cherry-picking

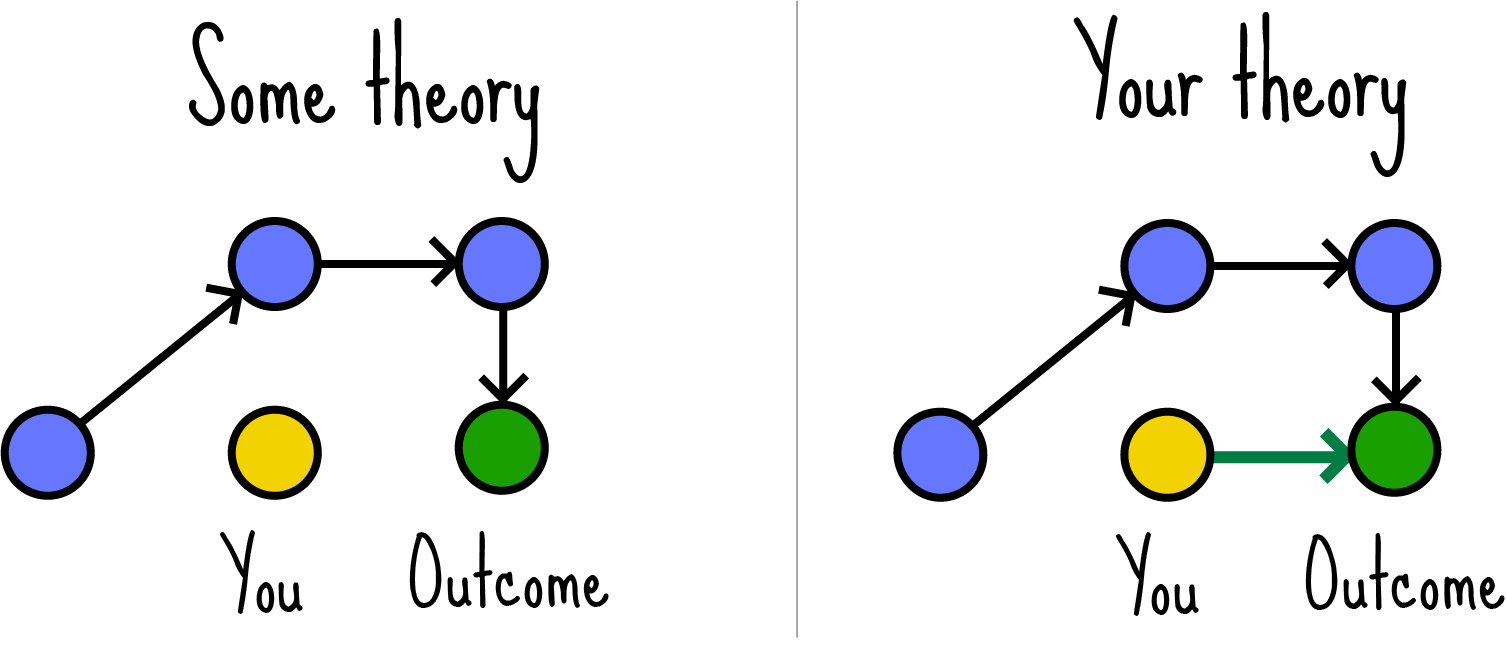

On the other hand, if the outcome is good, we may propose explanations which include us in the causal model. We want to draw the lines such that they go through us.

In other words, we may want to take credit for things we otherwise played little to no causal role in. Cherry-picking privileges certain explanations - namely the self-serving ones - at the expense of alternative theories which are equally plausible.

Like plausible deniability, cherry-picking is common in domains where there is a lot of causal opacity: politics, engineering, marketing, education … but who am I kidding, it is everywhere.

If there’s room for interpretation, then there is certainly room for charitable interpretation!

+ parting thoughts

I know, I know, this paints a depressing picture about the state of - well, all of reality.

If there’s enough causal opacity, anyone who’s particularly creative and conniving can craft a self-serving explanation which is, unfortunately, plausible! That’s the whole point of causal opacity: many explanations are plausible.

So if you want to know how things actually work, and not just the self-serving explanations offered by clever rhetoricians, what can you do to identify the best explanations?

There is no silver bullet, but I like to keep a few questions in mind:

- Who is drawing the map? Who is proposing the explanation? And who stands to benefit from it?

- Is the map overly simplistic? If so, can we think of alternative theories that would explain the same data?

- Is the map overly complex? If so, can we work out which of the theories is most likely?

- Can we open the explanation up to peer review? Can multiple people see the evidence, propose their own explanations and identify which is most likely?

- Finally - and most importantly - can we put the theory to the test? Can we conduct a real scientific experiment?
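As a rough illustration of why that last question matters most, here is a small Python sketch. Everything in it is an assumption made for the example: the `TRUE_EFFECT` value, the hidden `demand` variable, and the rule that managers advertise where demand is already high. It compares a naive observational comparison with a randomized experiment in the same imaginary world.

```python
import random

TRUE_EFFECT = 0.2  # assumed real lift from advertising (made-up number)


def mean(xs):
    return sum(xs) / len(xs)


def revenue_gap(assign_randomly, n=2000, seed=1):
    """Mean revenue of advertised regions minus non-advertised regions."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        demand = rng.gauss(0, 1)  # hidden local demand (the confounder)
        if assign_randomly:
            # Experiment: a coin flip decides who gets advertising.
            advertised = rng.random() < 0.5
        else:
            # Observation: managers advertise where demand is already high.
            advertised = demand > 0
        revenue = demand + TRUE_EFFECT * advertised + rng.gauss(0, 0.5)
        (treated if advertised else control).append(revenue)
    return mean(treated) - mean(control)


observed = revenue_gap(assign_randomly=False)
experiment = revenue_gap(assign_randomly=True)
print(f"observational gap: {observed:.2f}")
print(f"randomized gap:    {experiment:.2f}")
```

In this toy setup the observational gap is several times larger than the true effect, because high-demand regions were the ones that got advertised. The coin flip breaks that link, so the randomized gap lands much closer to `TRUE_EFFECT`. Real experiments are messier, but the principle is the same: randomization removes the lines you didn't draw yourself.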

One takeaway for me from all of this is that there is a lot more subjectivity in the explanations we see than we often realize. Explanations about how the world works aren’t ordained by some higher power. They are proposed by people. People with interests and incentives, and people who are by their very nature subjective.

So the more we develop the skill of critically evaluating these explanations, the more we’ll approach a better understanding of how things actually work.

Thanks for reading,

Alex