Chesterton's fence, causality

Hey all -

What I learned or rediscovered recently

Chesterton’s fence

Whether it’s joining a new workplace, a new project team, or a new volunteer organization, at one point or another we’ve all likely expressed mild frustration at the way things are done. “Why don’t we just do this instead?” we wonder.

Because we see no apparent reason for the way things are done, we are quick to offer our improvements. Alas, we’ve stumbled across Chesterton’s fence: coming upon a lone fence on an untraveled road, we can’t see what it’s for, so we consider taking it down. Seldom do we ask why the fence was put up in the first place.

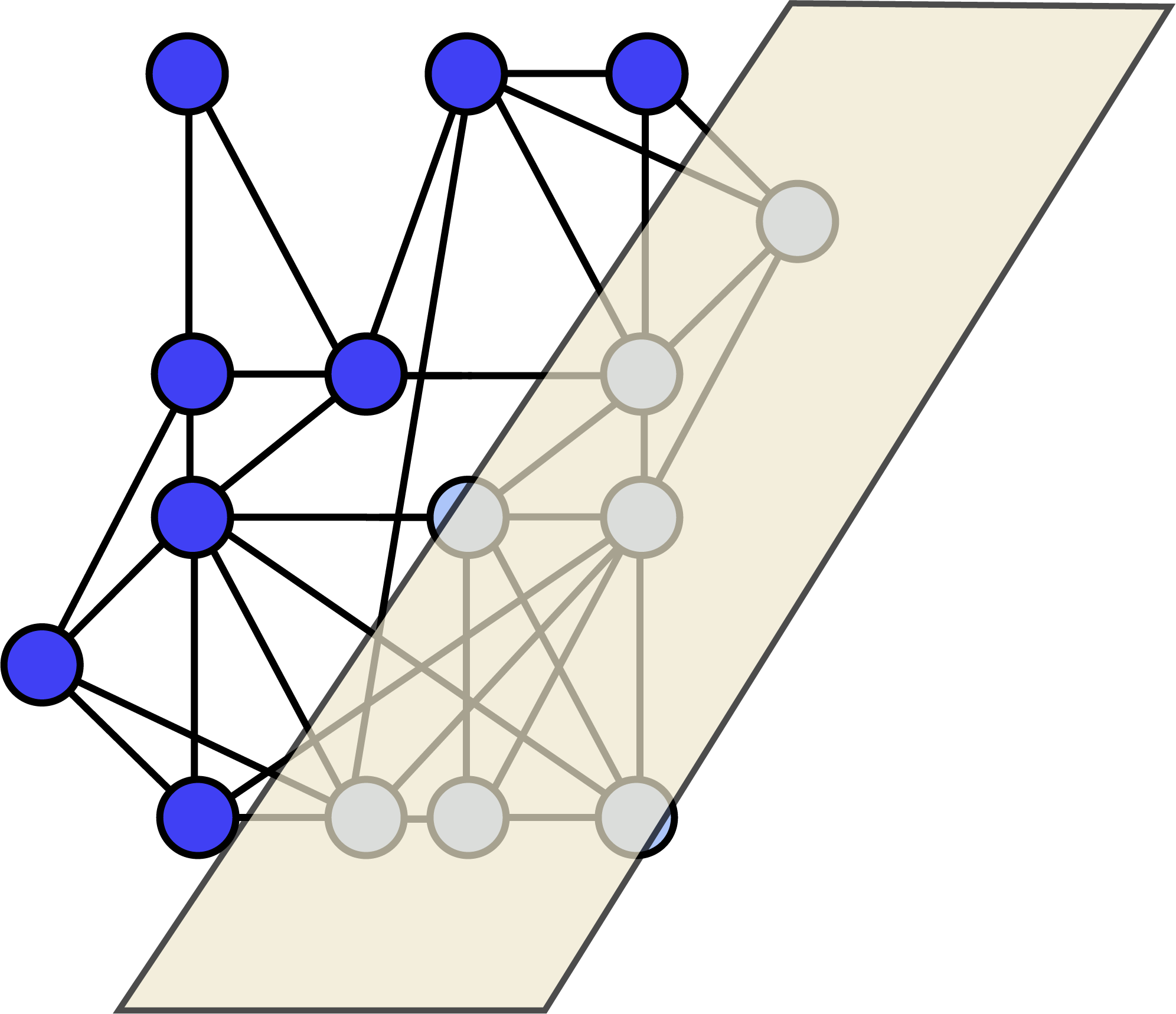

We can think of this situation more generally as an information problem. When we encounter a new system, we necessarily have incomplete information about it. We could say that the complete system is obscured from our view.

We don’t understand its history, its dependencies, or its risks. We can’t see the larger context, and so we don’t understand how the system really works. As a result, when we suggest ways to fix the system, we can’t fully anticipate the downstream consequences, because those are hidden from us too.

Chesterton’s fence reminds us to understand a system more completely before we seek to change it. We have to consider just how much remains out of view, and how best we can uncover it.

Often, we do this by talking to people, conducting our own research, and tinkering with the pieces before making any sweeping changes. Only after we’ve understood the system can we decide to change it, or, perhaps just as often, to leave it alone.

Causality

As humans, we’re always looking for causality because we want to know how things work. Ultimately, this knowledge enhances our survival and helps us live better lives.

The problem is that causality can be surprisingly hard to pin down. That’s because we often can’t observe causation directly; we only observe correlation.

For example, if I say, “if you work hard, you’ll succeed,” that is a causal statement. We observe a relationship between hard work and success, and so we conclude that hard work causes success.

Of course, there are many other explanations that satisfy this apparent relationship.

Perhaps other factors, called “confounding variables,” influence both how hard people work and how successful they become. Examples here include intelligence, relationships, luck, and so on.

Perhaps there is no relationship at all: working hard doesn’t actually affect your chances of success, but by mere coincidence, successful people happen to work hard. That would be a “spurious correlation.”

Perhaps the causality is even reversed: success causes people to work harder because that’s what society expects of them.

Now, you’ll notice that not all of these explanations are equally likely. We identify a causal relationship by selecting the most likely explanation for the relationship we observe.

But this should at least suggest that there are many ways of explaining what we observe. The first, most basic, and most intuitive explanation may not always be the right one. Knowing the various ways in which a correlation can be explained can help us separate fact from fiction.

Thanks for reading,

Alex