Optimization, hill climbing, simulated annealing, the explore/exploit tradeoff

Hey all -

+ what I learned or rediscovered recently #

Optimization #

Optimization is a favorite topic of mine because optimization is everywhere. When you go to the grocery store to buy food, you’re optimizing. When you decide where to go on vacation, you’re optimizing. When you choose what movie to watch, you’re optimizing. You get the point: every decision you make is in some shape or form an optimization.

So what is optimization? It’s the process of moving toward a better outcome or away from a worse outcome. If there exist better and worse outcomes at all, then there too exists optimization.

Let’s take an example. When you’re shopping for groceries, there is some combination of factors that best achieve what you want - maybe it’s minimizing cost, minimizing time spent and maximizing quality of ingredients. Perhaps there are a couple of hard constraints in there too, like dietary restrictions or having enough food for a few days.

We can then imagine a basket of factors. Some combinations of factors will contribute to better shopping experiences, while others, worse experiences. Spending a lot of money on poor-quality ingredients is clearly a worse outcome.

Now, very often, we don’t just know which combination will generate the best outcome. Maybe spending more would allow us to get really high-quality products - which may matter a lot - but we’re no longer minimizing cost as effectively.

As a result, we have to search for the best combination of factors. We may make incremental tweaks to our first shopping experience, gradually improving each subsequent one over time. Or, we may shop radically differently each time, hoping to stumble upon the “perfect” way. The way in which we search - that methodology - that’s an algorithm.

There are many different algorithms we could use, each with different tradeoffs.

But before we explore a couple of them, I want to emphasize the big picture here: if you care about making good decisions, you should care to some degree about optimization. Optimization theory not only tells us that there is a direction we can travel toward better decisions, but also why we may choose one particular direction over another[1].

Hill climbing #

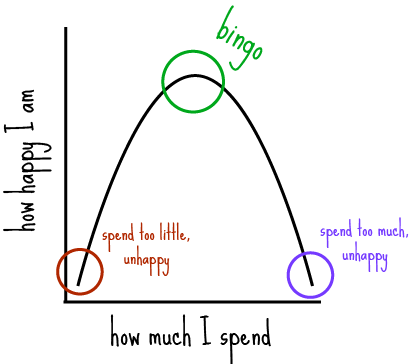

When people talk about optimization, I imagine (though I’m no expert) they’re often visualizing something like this:

In other words, there is some optimal point at which we do not do too much nor too little of whatever the input is - like how much we spend on groceries - but rather some amount that’s justtttt right.

Notice how that looks like a hill. We could be randomly plopped anywhere on the hill, and to get the optimal point, all we have to do is make sure we travel up. Climb the hill.

This is how most of us live our daily lives. We make decisions, see what the outcome is, and then tweak our decisions for next time so that we make better ones. Incremental improvements.

Hill climbing is an example of a local search algorithm. Well then, what’s a global search algorithm?

Simulated annealing #

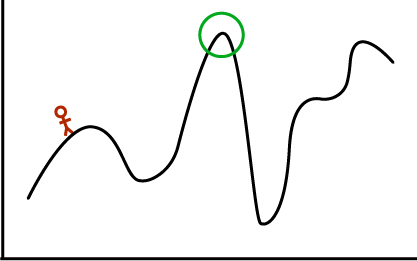

Let’s say you’re trying to get to the very top of the mountain and you’re using the hill climbing algorithm. So you climb and climb and climb - until there is no more up.

Are we done? Not necessarily.

Maybe you chose the wrong hill to start on. Maybe we have to go down to go back up.

If we only climb hills, we may get stuck on a local maximum. We need a better algorithm to find the one and only global maximum. We need a global search algorithm.

One of those algorithms is called simulated annealing. Here, we slightly augment the hill climbing strategy: now we add random jumps around the entire possibility space. Are they higher than the hill we’re on? If so, switch hills. If not, continue up.

How does this apply to real life?

Well if we care about making better decisions, we will try and find local maxima by slightly tweaking where we are now. But notice how we may get stuck on the wrong hill!

If we want to truly make the best decisions, then sometimes we have to radically shake things up. We have to jump hills.

The explore/exploit tradeoff #

Now for the last concept. Nothing is free, and neither is search. Searching may cost money or energy - but at very least, searching costs time.

Given finite time, should we stay on the hill we’re on (hill climbing), or jump hills (simulated annealing)? In other words, do we exploit the current opportunity - what we know to be pretty good? Or do we explore other opportunities - which could possibly be better?

This is the explore/exploit tradeoff. Stripped of the jargon, this is a very familiar dilemma. Do you go out to the restaurant you love, or do you try a completely new one? Do you revisit your favorite travel destination, or explore anew? Decisions, decisions.

We can be pretty sure that we shouldn’t spend all of our time exploiting, nor should we spend all of our time exploring. There needs to be some balance.

According to the book Algorithm to Live By, author Brian Christian makes a bold claim: the answer is 37%. We should spend the first 37% of our entire time exploring, then the last 63% exploiting the best opportunities we found.

There are many nuances to this claim, so you have to read the book (it’s excellent), but the overarching point remains: given finite time, we must always consider whether we should exploit the hill we’re on, or explore other hills. This goes for climbing actual hills, but also for business or friendships or relationships or - well, you get the point.

Thanks for reading,

Alex

[1] There’s a good discussion to be had about over-optimizing - namely relating to “antifragility” - which I’ll talk about in a later newsletter.